Sebastian Raschka

last updated: 05/04/2014

All code was executed in Python 3.4

I am really looking forward to your comments and suggestions to improve and extend this little collection! Just send me a quick note

via Twitter: @rasbt

or Email:

Implementing the least squares fit method for linear regression and speeding it up via Cython


Introduction

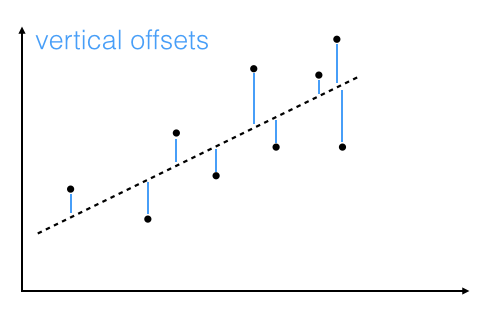

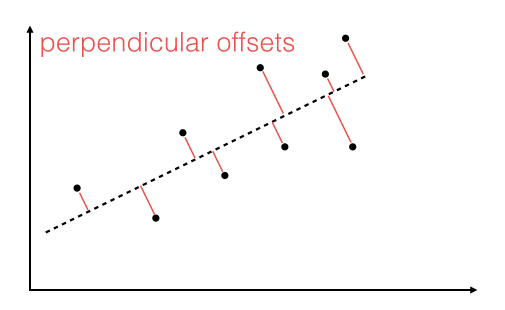

Linear regression via the least squares method is the simplest approach to performing a regression analysis of a dependent and an explanatory variable. The objective is to find the best-fitting straight line through a set of points that minimizes the sum of the squared offsets from the line.

The offsets come in two different flavors: perpendicular and vertical, with respect to the line.

Here, we will use the more common approach: minimizing the sum of the squared vertical offsets. All formulas below are based on this criterion.
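To make the difference between the two kinds of offsets concrete, here is a minimal pure-Python sketch that scores a candidate line \(y = a \cdot x + b\) by both criteria. The function names and the three toy points are made up for illustration. For a fixed line, the perpendicular distance of a point is its vertical offset divided by \(\sqrt{1 + a^2}\), so the two sums differ only by the factor \(1/(1 + a^2)\); because that factor depends on the slope, minimizing the two criteria generally leads to different lines.

```python
def vertical_sse(x, y, a, b):
    """Sum of squared vertical offsets of the points from the line y = a*x + b."""
    return sum((y_i - (a * x_i + b)) ** 2 for x_i, y_i in zip(x, y))


def perpendicular_sse(x, y, a, b):
    """Sum of squared perpendicular distances of the points to the line y = a*x + b."""
    # Distance from (x_i, y_i) to the line a*x - y + b = 0 is |a*x_i - y_i + b| / sqrt(a^2 + 1),
    # so squaring and summing gives the sum below divided by (a^2 + 1)
    return sum((a * x_i - y_i + b) ** 2 for x_i, y_i in zip(x, y)) / (a ** 2 + 1)


# Hypothetical toy data, scored against the candidate line y = 1.5x + 1/3
x = [1.0, 2.0, 3.0]
y = [2.0, 3.0, 5.0]
print(vertical_sse(x, y, a=1.5, b=1/3))
print(perpendicular_sse(x, y, a=1.5, b=1/3))
```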

In more mathematical terms, our goal is to compute the best fit to \(n\) points \((x_i, y_i)\) with \(i = 1, 2, \ldots, n\), via a linear equation of the form

\(f(x) = a\cdot x + b\).

Here, we assume that the y-component is functionally dependent on the x-component.

In a Cartesian coordinate system, \(b\) is the intercept of the straight line with the y-axis, and \(a\) is the slope of this line.

In order to obtain the parameters for the linear regression line for a set of multiple points, we can rewrite the problem as a matrix equation

\(\pmb X \; \pmb a = \pmb y\)

\(\Rightarrow\Bigg[ \begin{array}{cc} x_1 & 1 \\ \vdots & \vdots \\ x_n & 1 \end{array} \Bigg] \bigg[ \begin{array}{c} a \\ b \end{array} \bigg] = \Bigg[ \begin{array}{c} y_1 \\ \vdots \\ y_n \end{array} \Bigg]\)

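With a little bit of calculus (setting the gradient of the squared error \(\lVert \pmb y - \pmb X \pmb a \rVert^2\) with respect to \(\pmb a\) to zero), we obtain the normal equations \(\pmb X^T \pmb X \; \pmb a = \pmb X^T \; \pmb y\). Provided that \(\pmb X^T \pmb X\) is invertible (i.e., not all \(x_i\) are identical), we can solve them for the parameter vector \(\pmb a = [a\;b]^T\):

\(\Rightarrow \pmb a = (\pmb X^T \; \pmb X)^{-1} \pmb X^T \; \pmb y\)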
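For a single explanatory variable, \(\pmb X^T \pmb X\) is only a 2x2 matrix, so the normal equations can be solved by hand. The following sketch (a hypothetical helper `normal_equations_fit`, pure Python, toy data) writes out the entries of \(\pmb X^T \pmb X\) and \(\pmb X^T \pmb y\) for the design matrix with rows \([x_i \;\; 1]\) and applies the explicit inverse of a 2x2 matrix.

```python
def normal_equations_fit(x, y):
    """Solve (X^T X) [a, b]^T = X^T y for the design matrix X with rows [x_i, 1]."""
    n = len(x)                                      # sum of 1 * 1 over all rows
    sx = sum(x)                                     # sum of x_i
    sxx = sum(x_i * x_i for x_i in x)               # sum of x_i^2
    sy = sum(y)                                     # sum of y_i
    sxy = sum(x_i * y_i for x_i, y_i in zip(x, y))  # sum of x_i * y_i

    # X^T X = [[sxx, sx], [sx, n]] and X^T y = [sxy, sy]
    det = sxx * n - sx * sx
    if det == 0:
        raise ValueError("X^T X is singular (all x values are identical)")

    # Multiply X^T y by the 2x2 inverse (1/det) * [[n, -sx], [-sx, sxx]]
    a = (n * sxy - sx * sy) / det
    b = (sxx * sy - sx * sxy) / det
    return a, b


# Hypothetical toy data
a, b = normal_equations_fit([1.0, 2.0, 3.0], [2.0, 3.0, 5.0])
print(a, b)
```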


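The more classic approach to obtain the slope parameter \(a\) and y-axis intercept \(b\) would be:

\(a = \frac{S_{xy}}{\sigma_{x}^{2}}\quad\) (slope)

\(b = \bar{y} - a\bar{x}\quad\) (y-axis intercept)

where \(\bar{x}\) and \(\bar{y}\) are the means of the \(x_i\) and \(y_i\), respectively, and

\(S_{xy} = \sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})\quad\) (covariance, without the \(1/n\) normalization)

\(\sigma_{x}^{2} = \sum_{i=1}^{n} (x_i - \bar{x})^2\quad\) (variance, without the \(1/n\) normalization)

Because both sums omit the same normalization factor, it cancels in the ratio for the slope \(a\).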
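As a quick sanity check of these formulas, here is a worked example with three made-up points, \((1, 2)\), \((2, 3)\), and \((3, 5)\); they are not part of the sample data used later.

```latex
\begin{aligned}
\bar{x} &= \tfrac{1 + 2 + 3}{3} = 2, \qquad \bar{y} = \tfrac{2 + 3 + 5}{3} = \tfrac{10}{3} \\
S_{xy} &= (-1)\left(-\tfrac{4}{3}\right) + (0)\left(-\tfrac{1}{3}\right) + (1)\left(\tfrac{5}{3}\right) = 3 \\
\sigma_{x}^{2} &= (-1)^2 + 0^2 + 1^2 = 2 \\
a &= \frac{S_{xy}}{\sigma_{x}^{2}} = \frac{3}{2} = 1.5, \qquad b = \bar{y} - a\bar{x} = \tfrac{10}{3} - 3 = \tfrac{1}{3}
\end{aligned}
```

Solving the matrix equation \(\pmb a = (\pmb X^T \; \pmb X)^{-1} \pmb X^T \; \pmb y\) for the same three points yields the same values.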

Least squares fit implementations

1. The matrix approach

First, let us implement the equation:

\(\pmb a = (\pmb X^T \; \pmb X)^{-1} \pmb X^T \; \pmb y\)

which I will refer to as the "matrix approach".

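```python
import numpy as np

def lin_lstsqr_mat(x, y):
    """ Computes the least-squares solution to a linear matrix equation. """
    # Design matrix with one row [x_i, 1] per data point
    X = np.vstack([x, np.ones(len(x))]).T
    # a = (X^T X)^{-1} X^T y, returned as [slope, intercept]
    return (np.linalg.inv(X.T.dot(X)).dot(X.T)).dot(y)
```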
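Explicitly inverting \(\pmb X^T \pmb X\) is fine for this small 2x2 system, but a commonly recommended alternative is to solve the normal equations directly with `np.linalg.solve()`, which avoids forming the inverse. The variant below, `lin_lstsqr_solve`, is a hypothetical addition that is not part of the benchmarks in this notebook; it is shown with three toy points.

```python
import numpy as np

def lin_lstsqr_solve(x, y):
    """Least-squares fit via the normal equations, using np.linalg.solve
    instead of computing the explicit inverse of X^T X (hypothetical variant)."""
    # Design matrix with one row [x_i, 1] per data point
    X = np.vstack([x, np.ones(len(x))]).T
    # Solve (X^T X) a = X^T y for a = [slope, intercept]
    return np.linalg.solve(X.T.dot(X), X.T.dot(y))

# Hypothetical toy data
slope, intercept = lin_lstsqr_solve([1.0, 2.0, 3.0], [2.0, 3.0, 5.0])
print(slope, intercept)
```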

2. The classic approach

Next, we will calculate the parameters separately, using only standard library functions in Python, which I will call the "classic approach".

\(a = \frac{S_{xy}}{\sigma_{x}^{2}}\quad\) (slope)

\(b = \bar{y} - a\bar{x}\quad\) (y-axis intercept)

```python
def classic_lstsqr(x, y):
    """ Computes the least-squares solution to a linear matrix equation. """
    # Means of x and y
    x_avg = sum(x)/len(x)
    y_avg = sum(y)/len(y)
    # Sums of squared deviations and cross-deviations (the 1/n factors cancel in the ratio)
    var_x = sum([(x_i - x_avg)**2 for x_i in x])
    cov_xy = sum([(x_i - x_avg)*(y_i - y_avg) for x_i,y_i in zip(x,y)])
    slope = cov_xy / var_x
    y_interc = y_avg - slope*x_avg
    return (slope, y_interc)
```

3. Using the lstsq numpy function

For our convenience, NumPy has a function, numpy.linalg.lstsq(), that can also compute the least-squares solution of a linear matrix equation. For more information, please refer to its documentation.

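```python
def numpy_lstsqr(x, y):
    """ Computes the least-squares solution to a linear matrix equation. """
    X = np.vstack([x, np.ones(len(x))]).T
    # lstsq returns (solution, residuals, rank, singular values); keep the solution [slope, intercept]
    return np.linalg.lstsq(X,y)[0]
```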
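Besides the solution, `np.linalg.lstsq()` returns the sum of squared residuals, the rank of \(\pmb X\), and its singular values, all of which `numpy_lstsqr` discards via `[0]`. The sketch below unpacks all four return values on toy data and also shows `np.polyfit()` with degree 1, which returns the coefficients highest power first, i.e., `[slope, intercept]`. The `rcond=None` argument assumes NumPy 1.14 or later.

```python
import numpy as np

# Hypothetical toy data
x = [1.0, 2.0, 3.0]
y = [2.0, 3.0, 5.0]

# Design matrix with rows [x_i, 1], as in numpy_lstsqr
X = np.vstack([x, np.ones(len(x))]).T
solution, residuals, rank, sing_vals = np.linalg.lstsq(X, y, rcond=None)
print(solution, residuals, rank)

# Degree-1 polynomial fit: coefficients come back as [slope, intercept]
slope, intercept = np.polyfit(x, y, 1)
print(slope, intercept)
```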

4. Using the linregress scipy function

The last approach uses scipy.stats.linregress(), which returns five values: the slope, the y-axis intercept, the correlation coefficient, the two-sided p-value, and the standard error of the slope estimate. We only keep the first two. See the SciPy documentation of scipy.stats.linregress() for details.

```python
import scipy.stats

def scipy_lstsqr(x,y):
    """ Computes the least-squares solution to a linear matrix equation. """
    # Keep only (slope, intercept) from linregress's five return values
    return scipy.stats.linregress(x, y)[0:2]
```
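In recent SciPy versions, the result of `linregress()` also exposes its five values as named attributes, which can be more readable than tuple indexing. A small sketch on toy data; the attribute names follow current SciPy, and older releases may only support indexing.

```python
import scipy.stats

# Hypothetical toy data
x = [1.0, 2.0, 3.0]
y = [2.0, 3.0, 5.0]

result = scipy.stats.linregress(x, y)

# Named access to the two values that scipy_lstsqr slices with [0:2]
slope, intercept = result.slope, result.intercept
# The remaining values: correlation coefficient, p-value, standard error of the slope
r, p, stderr = result.rvalue, result.pvalue, result.stderr
print(slope, intercept, r, p, stderr)
```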

Generating sample data and benchmarking

In order to test our different least squares fit implementations, we will generate some sample data:

- 500 sample points for the x-component within the range [0, 500)
- 500 sample points for the y-component within the range [100, 600)

where each sample point is multiplied by a random factor from {0.8, 0.9, 1.0, 1.1}, i.e., within the range [0.8, 1.2).

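```python
import random
random.seed(12345)

# Base values 0..499 (x) and 100..599 (y), each scaled by a random factor in {0.8, 0.9, 1.0, 1.1}
x = [x_i*random.randrange(8,12)/10 for x_i in range(500)]
y = [y_i*random.randrange(8,12)/10 for y_i in range(100,600)]
```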
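Because `random.randrange(8, 12)` returns an integer from 8 up to, but excluding, 12, each point is scaled by one of only four factors. A quick check that draws many factors (these draws are separate from the ones used for `x` and `y` above):

```python
import random

random.seed(12345)
# randrange(8, 12) yields an integer in {8, 9, 10, 11}; dividing by 10 gives the scaling factor
factors = {random.randrange(8, 12) / 10 for _ in range(10000)}
print(sorted(factors))
```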

Visualization

To check how our dataset is distributed and how well the straight line obtained via the least squares fit describes it, we will plot both in a scatter plot.

Note that we are using our "matrix approach" here for simplicity, but after plotting the data, we will check whether all four implementations yield the same parameters.

```python
%matplotlib inline
from matplotlib import pyplot as plt

slope, intercept = lin_lstsqr_mat(x, y)

# Two x positions slightly beyond the data range are enough to draw the fitted line
line_x = [round(min(x)) - 1, round(max(x)) + 1]
line_y = [slope*x_i + intercept for x_i in line_x]

plt.figure(figsize=(8,8))
plt.scatter(x,y)
plt.plot(line_x, line_y, color='red', lw=2)

plt.ylabel('y')
plt.xlabel('x')
plt.title('Linear regression via least squares fit')

# The slope multiplies x; the intercept is the constant term
ftext = 'y = ax + b = {:.3f}x + {:.3f}'.format(slope, intercept)
plt.figtext(.15,.8, ftext, fontsize=11, ha='left')

plt.show()
```

Comparing the results from the different implementations

As mentioned above, let us confirm that the different implementations computed the same parameters (i.e., slope and y-axis intercept) as the solution to the linear equation.

```python
import prettytable

# (slope, intercept) from each of the four implementations
params = [appr(x,y) for appr in [lin_lstsqr_mat, classic_lstsqr, numpy_lstsqr, scipy_lstsqr]]

print(params)

fit_table = prettytable.PrettyTable(["", "slope", "y-intercept"])
fit_table.add_row(["matrix approach", params[0][0], params[0][1]])
fit_table.add_row(["classic approach", params[1][0], params[1][1]])
fit_table.add_row(["numpy function", params[2][0], params[2][1]])
fit_table.add_row(["scipy function", params[3][0], params[3][1]])

print(fit_table)
```
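Rather than comparing the printed table by eye, the agreement can also be checked programmatically. A minimal sketch with a hypothetical helper `all_params_close`, which compares every (slope, intercept) pair against the first one using `math.isclose()`; note that `math.isclose()` requires Python 3.5 or later, and the tolerances are arbitrary choices.

```python
import math

def all_params_close(params, rel_tol=1e-9, abs_tol=1e-9):
    """Return True if every (slope, intercept) pair agrees with the first one."""
    ref_slope, ref_intercept = params[0][0], params[0][1]
    return all(
        math.isclose(p[0], ref_slope, rel_tol=rel_tol, abs_tol=abs_tol)
        and math.isclose(p[1], ref_intercept, rel_tol=rel_tol, abs_tol=abs_tol)
        for p in params[1:]
    )

# Hypothetical example values that differ only by floating-point rounding
print(all_params_close([(1.5, 1/3), (1.5000000000001, 0.3333333333333)]))
```

In the notebook, `all_params_close(params)` should return `True` if the four implementations agree up to rounding.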

Initial performance comparison

To get a first impression of how the different least squares implementations compare in performance, let us do a quick benchmark using the timeit module via IPython's %timeit magic.

```python
import timeit

for lab,appr in zip(["matrix approach","classic approach",
                     "numpy function","scipy function"],
                    [lin_lstsqr_mat, classic_lstsqr,
                     numpy_lstsqr, scipy_lstsqr]):
    print("\n{}: ".format(lab), end="")
    %timeit appr(x, y)
```
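`%timeit` is only available inside IPython. In a plain Python script, a similar measurement can be sketched with the standard library's `timeit.repeat()`; the helper name `time_per_call` and the default `number`/`repeat` values are arbitrary choices for illustration. Following the advice in the `timeit` documentation, it reports the minimum over the repeats rather than the mean.

```python
import timeit

def time_per_call(func, *args, number=100, repeat=3):
    """Best average time per call (in seconds) over `repeat` runs of `number` calls."""
    timings = timeit.repeat(lambda: func(*args), number=number, repeat=repeat)
    return min(timings) / number

# Example: time the built-in sum on a list of 500 floats
data = [float(i) for i in range(500)]
print(time_per_call(sum, data))
```

In the notebook, the same helper could be called as `time_per_call(classic_lstsqr, x, y)`.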

The timings above indicate that the "classic" approach (Python's built-in functions only) is significantly slower than the other implementations, roughly by an order of magnitude.

Compiling the Python code via Cython in the IPython notebook

Maybe we can speed things up a little bit via Cython's C extensions for Python. Cython is basically a hybrid between C and Python and can be pictured as compiled Python code with type declarations.

Since we are working in an IPython notebook here, we can make use of the %%cython cell magic: it automatically translates the code in the cell to C, compiles it, and loads the resulting functions.

Just to be thorough, let us also compile the other functions, which already use numpy objects.

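```python
# Current Cython/IPython versions provide the magic via %load_ext Cython;
# older IPython releases shipped it as %load_ext cythonmagic instead
%load_ext Cython
```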

```python
%%cython
import numpy as np
import scipy.stats
cimport numpy as np

def cy_lin_lstsqr_mat(x, y):
    """ Computes the least-squares solution to a linear matrix equation. """
    X = np.vstack([x, np.ones(len(x))]).T
    return (np.linalg.inv(X.T.dot(X)).dot(X.T)).dot(y)

def cy_classic_lstsqr(x, y):
    """ Computes the least-squares solution to a linear matrix equation. """
    x_avg = sum(x)/len(x)
    y_avg = sum(y)/len(y)
    var_x = sum([(x_i - x_avg)**2 for x_i in x])
    cov_xy = sum([(x_i - x_avg)*(y_i - y_avg) for x_i,y_i in zip(x,y)])
    slope = cov_xy / var_x
    y_interc = y_avg - slope*x_avg
    return (slope, y_interc)

def cy_numpy_lstsqr(x, y):
    """ Computes the least-squares solution to a linear matrix equation. """
    X = np.vstack([x, np.ones(len(x))]).T
    return np.linalg.lstsq(X,y)[0]

def cy_scipy_lstsqr(x,y):
    """ Computes the least-squares solution to a linear matrix equation. """
    return scipy.stats.linregress(x, y)[0:2]
```

Comparing the compiled Cython code to the original Python code

```python
import timeit

for lab,appr in zip(["matrix approach","classic approach",
                     "numpy function","scipy function"],
                    [(lin_lstsqr_mat, cy_lin_lstsqr_mat),
                     (classic_lstsqr, cy_classic_lstsqr),
                     (numpy_lstsqr, cy_numpy_lstsqr),
                     (scipy_lstsqr, cy_scipy_lstsqr)]):
    print("\n\n{}: ".format(lab))
    # Original Python version
    %timeit appr[0](x, y)
    # Cython-compiled version
    %timeit appr[1](x, y)
```

As we've seen before, our "classic" implementation of the least squares method is pretty slow compared to using NumPy's functions. This is not surprising, since NumPy is highly optimized and already runs compiled C and Fortran code (its linear algebra routines call LAPACK). This also explains why compiling the NumPy-based functions with Cython makes no significant difference: most of their run time is already spent in compiled code.

However, we were able to speed up the "classic approach" quite significantly, by roughly 1500%.

The following two code blocks visualize these results as bar plots.

```python
import timeit

funcs = ['classic_lstsqr', 'cy_classic_lstsqr',
         'lin_lstsqr_mat', 'numpy_lstsqr', 'scipy_lstsqr']
labels = ['classic approach','classic approach (cython)',
          'matrix approach', 'numpy function', 'scipy function']

# Total seconds for 1000 calls, which equals the average time per call in milliseconds
times = [timeit.Timer('%s(x,y)' %f,
                      'from __main__ import %s, x, y' %f).timeit(1000)
         for f in funcs]

# Speedup of each implementation relative to the pure-Python "classic" baseline
times_rel = [times[0]/t for t in times[1:]]
```

```python
%matplotlib inline
import matplotlib.pyplot as plt

# Absolute timings
x_pos = np.arange(len(funcs))
plt.bar(x_pos, times, align='center', alpha=0.5)
plt.xticks(x_pos, labels, rotation=45)
plt.ylabel('time per call in ms')
plt.title('Performance of different least squares fit implementations')
plt.show()

# Speedups relative to the classic approach
x_pos = np.arange(len(funcs[1:]))
plt.bar(x_pos, times_rel, align='center', alpha=0.5, color="green")
plt.xticks(x_pos, labels[1:], rotation=45)
plt.ylabel('relative performance gain')
plt.title('Performance gain compared to the classic least squares implementation')
plt.show()
```

Bonus: How to use Cython without the IPython magic

IPython's notebook is really great for exploratory analysis and documentation, but what if we want to compile our Python code via Cython without letting IPython's magic do all the work?

These are the steps you would need.

1. Creating a .pyx file containing the desired code or function.

```python
%%file ccy_classic_lstsqr.pyx

def ccy_classic_lstsqr(x, y):
    """ Computes the least-squares solution to a linear matrix equation. """
    x_avg = sum(x)/len(x)
    y_avg = sum(y)/len(y)
    var_x = sum([(x_i - x_avg)**2 for x_i in x])
    cov_xy = sum([(x_i - x_avg)*(y_i - y_avg) for x_i,y_i in zip(x,y)])
    slope = cov_xy / var_x
    y_interc = y_avg - slope*x_avg
    return (slope, y_interc)
```

2. Creating a simple setup file

```python
%%file setup.py

# setuptools replaces distutils, which was removed from the standard library in Python 3.12
from setuptools import setup, Extension
from Cython.Distutils import build_ext

setup(
    cmdclass = {'build_ext': build_ext},
    ext_modules = [Extension("ccy_classic_lstsqr", ["ccy_classic_lstsqr.pyx"])]
)
```

3. Building and Compiling

```python
!python3 setup.py build_ext --inplace
```

4. Importing and running the code

```python
import ccy_classic_lstsqr

%timeit classic_lstsqr(x, y)
%timeit cy_classic_lstsqr(x, y)
%timeit ccy_classic_lstsqr.ccy_classic_lstsqr(x, y)
```